So-called artificial intelligence is a contentious topic for various reasons, including copyright issues, energy consumption, privacy concerns and chatbots such as ChatGPT sometimes giving incorrect answers to queries.

However, there’s one thing that critics and evangelists can agree on: AI increasingly permeates many layers of digital life, from culture to business to politics. As is common with new technologies, the rise in illicit uses of AI, notably deepfakes, is closely tied to its growing popularity and, more importantly, its accessibility.

Deepfakes are AI-generated video or audio that impersonates people such as celebrities or politicians in order to spread misinformation or disinformation or to defraud consumers. Businesses around the world are already feeling the impact of this type of artificial content.

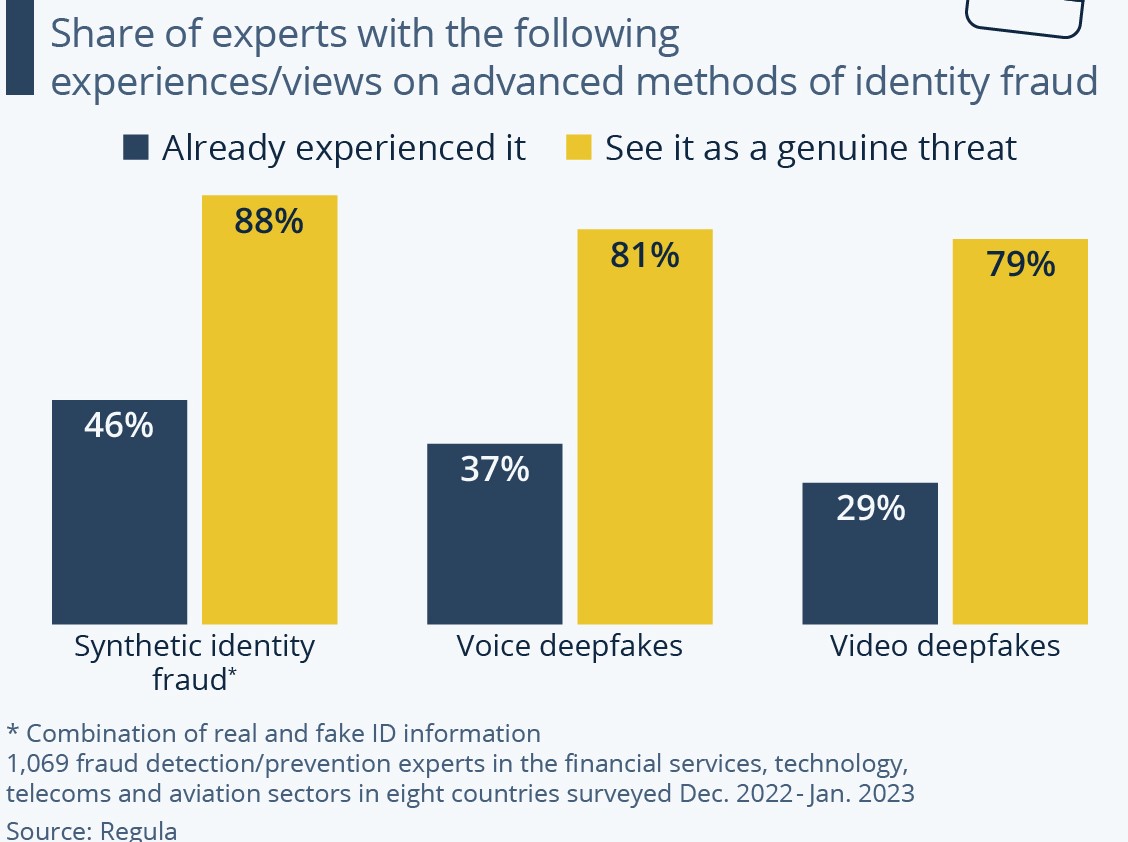

A survey by identity verification provider Regula of more than 1,000 fraud detection and prevention experts in countries including the United States, the United Kingdom, France and Germany shows that a sizable share of their companies had been targeted by one of three methods of advanced identity fraud.

Forty-six percent of respondents had encountered synthetic identity fraud, in which real and fake identity components are combined, for example a fake Social Security number paired with a real name, address and date of birth.

Thirty-seven percent reported encountering voice deepfakes. A recent high-profile example from politics came in January, when robocalls using an AI-generated imitation of President Biden’s voice tried to dissuade prospective voters from voting in the primaries.

Video deepfakes are currently less common, with 29 percent of respondents having experienced such fraud attempts. As generative AI companies turn their attention to video, with tools such as OpenAI’s Sora, this problem could become more pronounced in the coming months and years.

This concern is also reflected in how many of the surveyed experts expect these three fraud methods to become a genuine threat: between 80 and 90 percent agreed with this assessment.

“AI-generated fake identities can be difficult for humans to detect, unless they are specially trained to do so,” says Ihar Kliashchou, Chief Technology Officer at Regula. “While neural networks may be useful in detecting deepfakes, they should be used in conjunction with other antifraud measures that focus on physical and dynamic parameters.”

Florian Zandt contributed this from Statista